Unmasked: AI's Sneaky Job Market Infiltration - Companies Sound the Alarm


2025-04-23 21:39:38

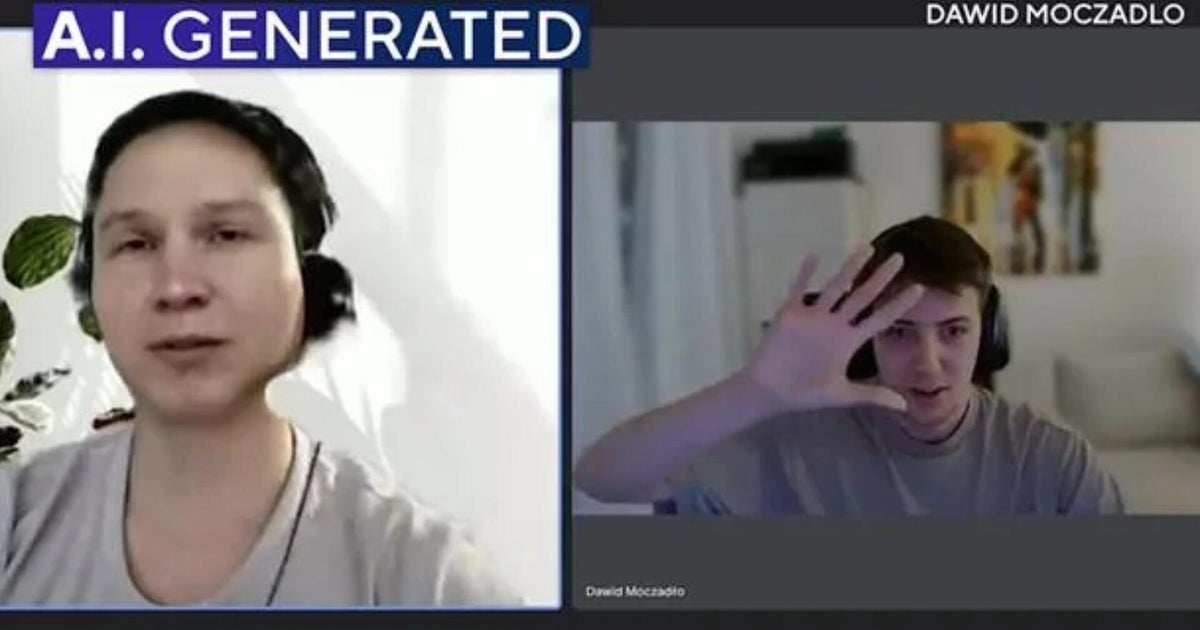

In today's rapidly evolving job market, a new and unsettling trend is emerging: the rise of artificial impersonators in the workplace. Imagine sitting next to a colleague and wondering, "Are they even real?" This scenario is no longer just a plot from a sci-fi movie—it's becoming a genuine concern for employers and job seekers alike.

Companies are now facing an unprecedented challenge as sophisticated employment scams target both sides of the hiring landscape. Fake job applicants, powered by advanced AI technologies, are infiltrating recruitment processes, creating a complex web of digital deception.

CBS News Confirmed executive producer Melissa Mahtani sheds light on this growing phenomenon, highlighting how organizations are scrambling to develop robust verification methods to distinguish between genuine human candidates and AI-generated imposters.

The stakes are high: these scams not only threaten the integrity of hiring processes but also pose significant risks to company security and workforce authenticity. As technology continues to blur the lines between human and machine, employers must stay vigilant and innovative in their screening techniques.

What was once a far-fetched concept is now a pressing reality—your next coworker might just be a sophisticated algorithm in disguise.

The Rise of AI Imposters: Unmasking the Digital Deception in Job Markets

In an era of unprecedented technological advancement, the job market is facing a new and insidious challenge that threatens the very fabric of professional recruitment. Artificial intelligence has evolved from a promising technological tool to a potential weapon of mass employment disruption, creating a landscape where distinguishing between human and machine applicants has become increasingly complex and fraught with risk.

Unraveling the Digital Workforce Impersonation Epidemic

The Emerging Landscape of AI-Driven Employment Fraud

The digital transformation of recruitment processes has inadvertently created a breeding ground for sophisticated AI-powered impersonation strategies. Cutting-edge algorithms now possess the capability to generate hyper-realistic job applications, complete with nuanced professional backgrounds, meticulously crafted resumes, and seemingly authentic communication patterns. These digital doppelgängers are designed with such precision that they can seamlessly navigate complex hiring systems, presenting an unprecedented challenge for human resources professionals and organizational security protocols.

Companies are discovering that these AI-generated personas are not merely simple chatbots or rudimentary automated systems. Instead, they represent highly advanced technological constructs capable of mimicking human communication with remarkable sophistication. Machine learning models can now analyze thousands of professional profiles, synthesize contextually appropriate language, and generate application materials that are virtually indistinguishable from genuine human submissions.

Technological Arms Race: Detection and Prevention Strategies

Organizations are rapidly developing multilayered defense mechanisms to combat this emerging threat. Advanced verification technologies, including behavioral biometric analysis, deep learning pattern recognition, and real-time communication authenticity checks, are being deployed to identify and neutralize AI-generated job applications.

Cybersecurity experts are collaborating with machine learning researchers to develop increasingly complex screening algorithms. These systems analyze multiple data points, including writing style consistency, background narrative coherence, and subtle linguistic nuances that might reveal an artificial origin. The goal is to create a robust technological immune system capable of distinguishing between genuine human applicants and their algorithmic counterparts.

Ethical and Psychological Implications of AI Job Market Manipulation

Beyond the technological challenges, the rise of AI job application impersonation raises profound ethical and psychological questions. The potential for widespread employment fraud threatens not just individual organizations but the fundamental trust mechanisms that underpin professional recruitment ecosystems.

Job seekers and employers alike are experiencing heightened anxiety about the authenticity of professional interactions. The psychological impact of knowing that any interaction could potentially be with an AI construct creates a pervasive sense of uncertainty and erosion of interpersonal trust. This technological uncertainty extends beyond mere recruitment, potentially reshaping fundamental perceptions of professional communication and human-machine interactions.

Global Regulatory Responses and Future Outlook

International regulatory bodies are beginning to recognize the critical need for comprehensive frameworks addressing AI-driven employment fraud. Proposed legislation aims to establish clear guidelines for AI application verification, mandate transparent disclosure of AI-assisted recruitment technologies, and create legal mechanisms to prosecute sophisticated digital impersonation attempts.

The future of employment verification will likely involve a complex interplay between advanced technological detection systems, stringent regulatory oversight, and evolving ethical standards. As AI continues to advance, the battle between technological innovation and security will remain a dynamic and critical arena of professional development.
